
Whisper

Whisper is OpenAI's model for transcribing spoken words into text. It is particularly useful for converting voice recorded at runtime into text that can then be sent to ChatGPT.

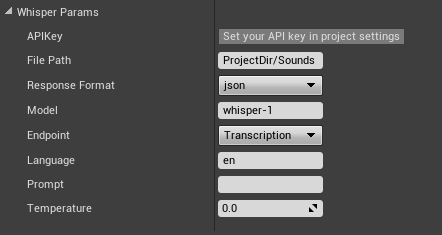

WhisperParams

- APIKey: Key for OpenAI API authentication. Set this in the project settings.

- FilePath: The path to the audio file, relative to the project directory. Example: "YourProjDir/Sounds/YourVoice.wav".

- ResponseFormat: The format of the transcript output. Possible values are json, text, srt, verbose_json, and vtt. Defaults to json if not specified.

- Model: ID of the model to use for transcription. Currently, only "whisper-1" is available.

- Endpoint: Specifies the action to be performed. In this case, "Transcription" indicates that the audio file will be transcribed.

- Language: The language of the input audio in ISO-639-1 format. Providing the input language can improve accuracy and latency. This parameter is optional.

- Prompt: An optional text input to guide the model's style or to continue a previous audio segment. The prompt should match the language of the audio.

- Temperature: The sampling temperature, a value between 0 and 1. Higher values make the output more random, while lower values make it more focused and deterministic. Defaults to 0. At 0, the model uses log probability to automatically increase the temperature until certain thresholds are hit.
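The following plain-C++ sketch shows how these fields fit together. It mirrors the WhisperParams fields and checks the constraints listed above. WhisperParamsSketch and ValidateWhisperParams are hypothetical names, not AIITK's actual types. Treating Language as a lowercase two-letter code is an assumption based on the ISO-639-1 format.

```cpp
#include <algorithm>
#include <cctype>
#include <string>
#include <vector>

// Hypothetical mirror of the WhisperParams fields described above.
// NOT the AIITK type: names and defaults here are illustrative assumptions.
struct WhisperParamsSketch {
    std::string APIKey;                   // OpenAI key (AIITK reads it from project settings)
    std::string FilePath;                 // e.g. "YourProjDir/Sounds/YourVoice.wav"
    std::string ResponseFormat = "json";  // json, text, srt, verbose_json, or vtt
    std::string Model = "whisper-1";
    std::string Endpoint = "Transcription";
    std::string Language;                 // optional ISO-639-1 code, e.g. "en"
    std::string Prompt;                   // optional style/continuation hint
    float Temperature = 0.0f;             // 0..1
};

// Returns human-readable problems; an empty result means the params look usable.
std::vector<std::string> ValidateWhisperParams(const WhisperParamsSketch& p) {
    static const std::vector<std::string> kFormats = {"json", "text", "srt", "verbose_json", "vtt"};
    std::vector<std::string> errors;

    if (p.APIKey.empty()) errors.push_back("APIKey is empty");
    if (p.FilePath.empty()) errors.push_back("FilePath is empty");
    if (std::find(kFormats.begin(), kFormats.end(), p.ResponseFormat) == kFormats.end())
        errors.push_back("ResponseFormat must be json, text, srt, verbose_json, or vtt");

    // Language is optional; when present, assume a two-letter lowercase code.
    if (!p.Language.empty()) {
        const bool twoLowercaseLetters =
            p.Language.size() == 2 &&
            std::all_of(p.Language.begin(), p.Language.end(),
                        [](unsigned char c) { return std::islower(c) != 0; });
        if (!twoLowercaseLetters)
            errors.push_back("Language must be an ISO-639-1 code such as \"en\"");
    }

    if (p.Temperature < 0.0f || p.Temperature > 1.0f)
        errors.push_back("Temperature must be between 0 and 1");
    return errors;
}
```

This document does not say whether AIITK validates these fields itself. Treat a check like this as an optional pre-flight step in your own code.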

Voice Transcription

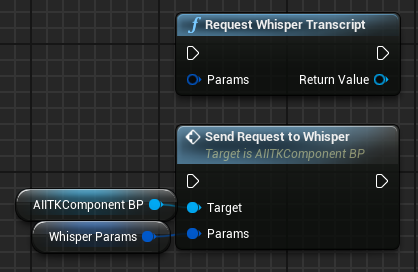

RequestWhisperTranscript/SendRequestToWhisper (AIITKComponentBP)

The RequestWhisperTranscript function takes a given .wav file and sends it to the Whisper API for transcription into text.

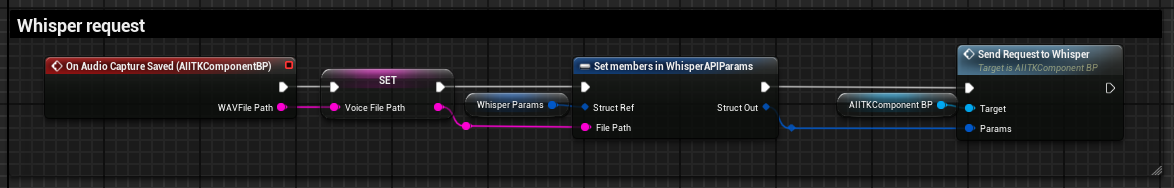

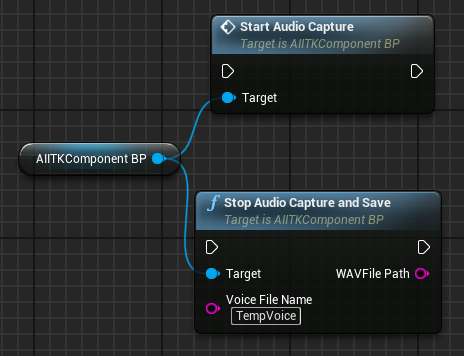
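Under the hood, a Whisper transcription is an HTTP request to OpenAI's transcription endpoint. The sketch below assembles the URL, the auth header, and the multipart form fields that OpenAI's Audio API accepts: `model`, `response_format`, `temperature`, and the optional `language` and `prompt`. The audio goes in a separate `file` part. The sketch does not send anything. A real HTTP client would also set the multipart Content-Type boundary. BuildTranscriptionRequest and TranscriptionRequestSketch are hypothetical names, and this is not how AIITK's RequestWhisperTranscript is implemented internally.

```cpp
#include <string>
#include <utility>
#include <vector>

// Hypothetical description of one transcription request (not an AIITK type).
struct TranscriptionRequestSketch {
    std::string Url;
    std::vector<std::pair<std::string, std::string>> Headers;
    // Text parts of the multipart/form-data body.
    std::vector<std::pair<std::string, std::string>> FormFields;
    // The audio is uploaded as a separate "file" part read from this path.
    std::string FilePath;
};

TranscriptionRequestSketch BuildTranscriptionRequest(
    const std::string& apiKey, const std::string& filePath,
    const std::string& model, const std::string& responseFormat,
    const std::string& language, const std::string& prompt, float temperature) {
    TranscriptionRequestSketch req;
    req.Url = "https://api.openai.com/v1/audio/transcriptions";
    req.Headers.push_back({"Authorization", "Bearer " + apiKey});
    req.FilePath = filePath;

    req.FormFields.push_back({"model", model});
    // Fall back to json, matching the documented default.
    req.FormFields.push_back({"response_format", responseFormat.empty() ? "json" : responseFormat});
    req.FormFields.push_back({"temperature", std::to_string(temperature)});

    // Optional fields are only included when the caller supplies them.
    if (!language.empty()) req.FormFields.push_back({"language", language});
    if (!prompt.empty()) req.FormFields.push_back({"prompt", prompt});
    return req;
}
```

Leaving `language` and `prompt` out when they are empty mirrors their optional status in the parameter list above.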

Recording/Saving Audio

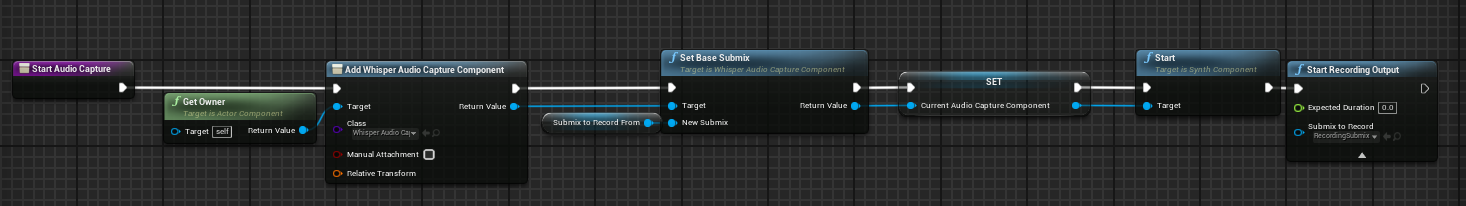

Before audio can be transcribed, you first need a way to record it. The functions above are what you need to save your voice (or any other input) to disk. For quick integration, I recommend using the functions in AIITKComponentBP to start and stop audio capture. AIITK uses the Audio Capture plugin that is now included with recent versions of Unreal. You can learn more about it here: Submix Overview

*With StopAudioCaptureAndSave, you only need to supply a filename; the default WAVFilePath output is YourBaseDirectory/Sounds/YourFileName.wav.
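The default path rule can be written out as a small helper. DefaultWavFilePath is a hypothetical name. It assumes the filename is passed without an extension and that paths use '/' separators. Check the actual WAVFilePath output in your project rather than relying on this sketch.

```cpp
#include <string>

// Hypothetical helper reproducing the documented default:
//   YourBaseDirectory/Sounds/YourFileName.wav
// Assumes fileName has no extension and '/' is the path separator.
std::string DefaultWavFilePath(const std::string& baseDirectory, const std::string& fileName) {
    std::string base = baseDirectory;
    // Drop one trailing '/' so the result does not contain "//".
    if (!base.empty() && base.back() == '/') base.pop_back();
    return base + "/Sounds/" + fileName + ".wav";
}
```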

The AIITKComponentBP uses RecordingSubmix by default. This submix effectively mutes the game audio, so only the default recording device input (typically the same as your Windows default) is heard on this track.

Recording audio works much the same way as with the Audio Capture plugin, since AIITK relies on that plugin. AIITK also includes a WhisperAudioCaptureComponent subclass that clears up some naming confusion and lets you easily set the desired submix if you need an alternative recording method.