
ChatGPT

Large language models are powerful tools for simulating characters, but that only scratches the surface of what they can do to enhance digital experiences. Beyond plain text generation, you can expand AI behavior and capabilities with functions: either simulate function calls, or let ChatGPT call your own external APIs by describing them with JSON schemas.

- GPT Parameters

- Messages

- Role: Choose your message type.

- Name: Name of the entity associated with this message, e.g. ChatBoy. Spaces are probably not allowed here, so stick to letters, digits, and underscores.

- User Message: Can send text or images to ChatGPT for processing.

- Assistant Message: The message that ChatGPT sent to the user.

- Tool Message: Message returned from a tool that ChatGPT used to complete a response.

- Raw Message: Send a message formatted as raw JSON, if desired.

- RequiredParams

- Model: Name of the GPT model for generating responses. Use the Get GPT Models function to see what models are available to you.

- APIKey: Key for OpenAI GPT API authentication. Set it in Project Settings -> Plugins -> AIITKDeveloperSettings, or use SetAPIKey.

- Endpoint: GPT API URL. Default "https://api.openai.com/v1/chat/completions".

- Stream Response: Boolean indicating if responses should be streamed. Use OnChunkReceived for handling responses when this is true.

- Advanced Params

- MaxTokens: Max number of tokens in response. Default -1, no specific limit.

- Temperature: Controls randomness of the response; higher values produce more varied output. Range 0-1 (the OpenAI API itself accepts values from 0 to 2).

- TopP: Top probability for token selection. Default 1.0.

- N: Number of completions to generate. Default 1.

- Seed: Sets a seed for deterministic outputs, like a Minecraft world seed. Default -1 for random.

- Stop: Sequence at which the model stops generating further tokens. (Currently a single string; this needs to be updated to an array of strings.)

- Presence Penalty: Penalizes new tokens based on their presence in history.

- Frequency Penalty: Penalizes new tokens based on their frequency in history.

- Logit Bias: Adjusts likelihood of specific tokens appearing.

- Log Probs: Whether to return log probability information with the response.

- Top Log Probs: An integer between 0 and 20 specifying the number of most likely tokens to return at each token position, each with an associated log probability. Logprobs must be set to true if this parameter is used.

- Response Timeout: Time in seconds before the API stops waiting for a response.

- Response Format: An object specifying the format that the model must output. Compatible with GPT-4 Turbo and all GPT-3.5 Turbo models newer than gpt-3.5-turbo-1106. Setting to { "type": "json_object" } enables JSON mode, which guarantees the message the model generates is valid JSON.

- User: Identifier for the user making the request.

- Tool Params

- Tool Choice

- Function Call Mode: Let ChatGPT choose which tool to use, specify a certain tool, or none at all. Use null option to skip adding tools to request entirely.

- Specific Function Name: The name of the specific tool you want ChatGPT to use.

- Tools: Array of tools that GPT can use to do work. See Tools section to learn more.


Text Generation

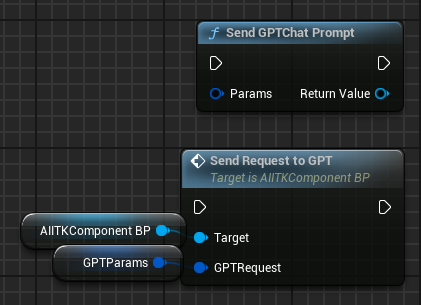

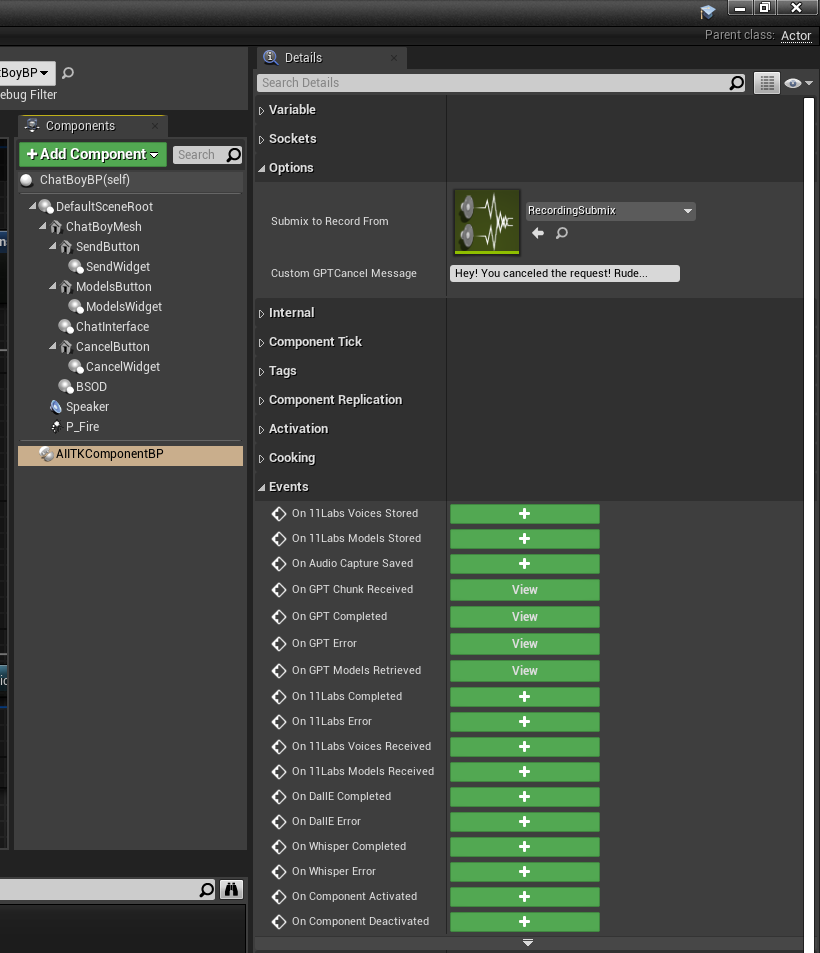

SendGPTChatPrompt/SendRequestToGPT (AIITKComponentBP)

The SendRequestToGPT function is used to send a chat prompt to the GPT API while also providing the context of previous messages exchanged in a conversation. This function is particularly useful when you need the AI model to maintain context and generate responses based on prior interactions.
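As a rough illustration of what "providing the context of previous messages" means at the API level, the sketch below assembles a Chat Completions request body from a message history plus one new user message. This is not AIITK's implementation; `build_chat_request` is a hypothetical helper, and the `-1` convention for `max_tokens` is borrowed from the MaxTokens parameter described above.

```python
import json

def build_chat_request(history, user_text, model="gpt-3.5-turbo-1106",
                       max_tokens=-1, stream=False):
    """Assemble a request body from prior messages plus a new user message.

    Hypothetical helper for illustration only; not part of AIITK.
    """
    messages = list(history)  # copy so the caller's history is not modified
    messages.append({"role": "user", "content": user_text})
    body = {"model": model, "messages": messages, "stream": stream}
    if max_tokens > 0:  # -1 means "no specific limit", so the field is omitted
        body["max_tokens"] = max_tokens
    return body

history = [
    {"role": "system", "content": "You are a retro console called Chat Boy."},
    {"role": "user", "content": "Hello?"},
    {"role": "assistant", "content": "GREETINGS, HUMAN."},
]
request_body = build_chat_request(history, "What year is it?")
print(json.dumps(request_body, indent=2))
```

Because the full history is resent with every request, the model can answer the new message in light of the earlier exchange.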

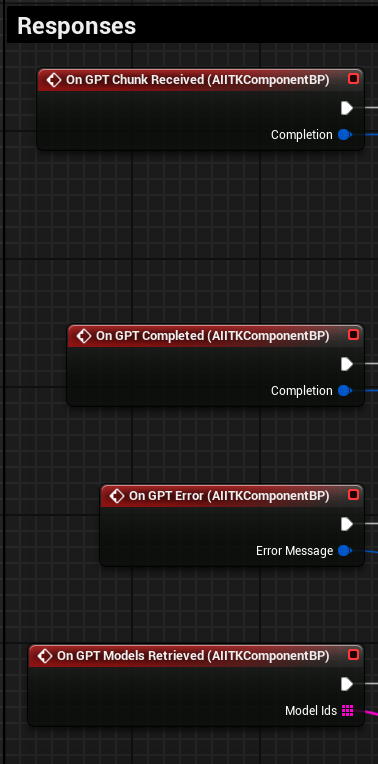

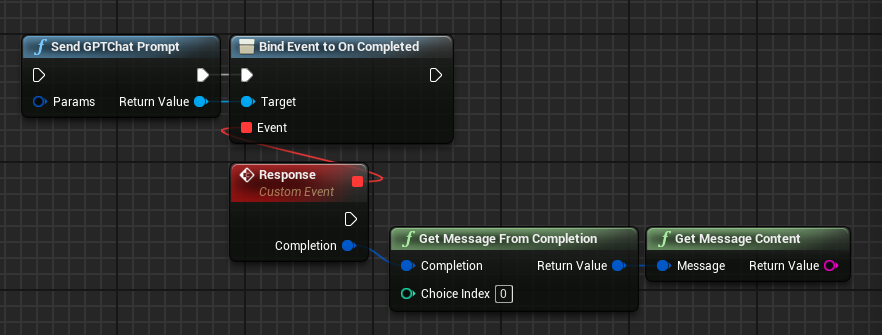

Handling Responses

As mentioned before, you can either bind to the response event manually or use the AIITKComponentBP to access a list of events that execute when the response is received.

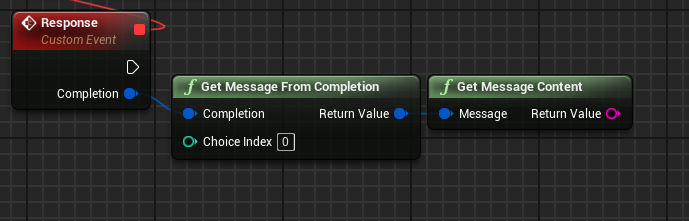

GetMessageFromCompletion/GetMessageContent

Use these utility functions to easily retrieve the content string from the selected choice index. The index should be 0 when N = 1, since only one completion is generated.
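For readers curious what such a lookup involves, here is a minimal sketch that reads the content string from a parsed Chat Completions response. The `get_message_content` function below is a hypothetical stand-in, not the plugin's code, and the sample response is hand-written to match the API's `choices[i].message.content` layout.

```python
def get_message_content(completion, choice_index=0):
    """Return the content string of the chosen completion, or None if absent.

    Hypothetical stand-in for illustration; not the plugin's implementation.
    """
    choices = completion.get("choices", [])
    if not 0 <= choice_index < len(choices):
        return None  # index out of range, e.g. asking for choice 1 when only one exists
    return choices[choice_index].get("message", {}).get("content")

# Hand-written sample shaped like a Chat Completions response with one choice.
sample_completion = {
    "choices": [
        {
            "index": 0,
            "message": {"role": "assistant", "content": "BEEP. IT IS 1995."},
            "finish_reason": "stop",
        }
    ]
}

print(get_message_content(sample_completion))
```

With a single completion requested, index 0 is the only valid choice; any other index returns `None` in this sketch.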

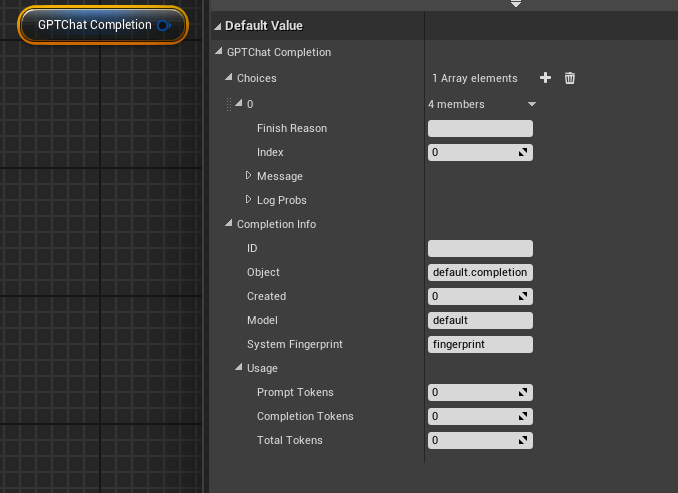

GPTChatCompletion (Struct)

GPTChatCompletion is the struct into which AIITK parses the data that ChatGPT generates. You receive it at the completion event.

Tools

Tools are pre-made operations that ChatGPT can use to do work. For example, it can call a function that picks an object from a list (enum) of items in the NPC's field of view, or format user-given information into a JSON object to send to a weather API.

In the context of game development here are some highlights on how utilizing function calls could be useful to you:

Customization and Control: It allows developers to pass a set of functions defined in the functions parameter along with the user query to the model. This enables the creation of more dynamic and interactive game environments, as the game can respond to player actions in real-time with customized outcomes based on the functions called.

Enhanced Interaction: The function call feature enables the ChatGPT model to call specific functions if it needs assistance to perform tasks. In a gaming context, this can mean more intelligent NPC interactions or context-aware events triggered by player actions, which are not predefined but generated in real-time based on the game's current state.

Structured Data Retrieval: Function calling can be used to get structured data from the model more reliably. For example, in a game, this could be used to fetch real-world data (like weather) or game-specific data (like player stats) and incorporate it into the game world, making the experience more immersive and responsive.

Integration with External Tools and APIs: Developers can describe functions to the ChatGPT model, which then intelligently outputs a JSON object containing arguments to call those functions. This feature allows for seamless integration of GPT's capabilities with external tools and APIs, offering a wide range of possibilities for enhancing game mechanics and interactions.

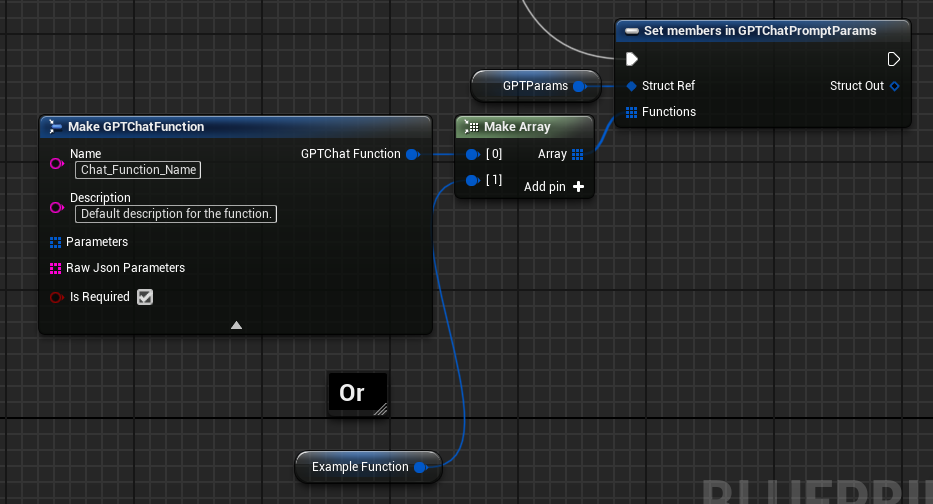

Constructing Functions For ChatGPT (Json Schemas)

There are a few ways you can construct JSON schemas for ChatGPT to process. Don’t worry if you’ve never worked with the JSON format before: AIITK tries its best to help you construct valid JSON schemas for use with ChatGPT by just filling in the necessary fields.

You can make functions as simple or as complex as you want. For example, if you want to give your GPT configuration access to the internet via an external API, just take the response processing one step further. Here’s a general outline for this flow:

1. Create a JSON schema that mirrors an external API's request parameters (a common example is a weather API).

2. Send the function (JSON schema) to ChatGPT manually or automatically.

3. When ChatGPT calls the function, send the arguments JSON from its response to the weather API.

4. Get the response JSON object from the weather API.

5. Send the weather API response back to ChatGPT to automatically respond with the weather based on user input context.
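The flow above can be sketched roughly as follows. Everything here is illustrative: `fake_weather_api` is a hypothetical stand-in for a real weather service, the `GetWeather` schema is invented for the example, and the assistant message is hand-written rather than returned by ChatGPT. The message shapes follow the `functions` / `function_call` request format used in the full request example later on this page.

```python
import json

# Step 1: a schema mirroring the (hypothetical) weather API's request parameters.
WEATHER_FUNCTION = {
    "name": "GetWeather",
    "description": "Look up the current weather for a city.",
    "parameters": {
        "type": "object",
        "properties": {"city": {"type": "string", "description": "City name"}},
        "required": ["city"],
    },
}

def fake_weather_api(params):
    """Hypothetical stand-in for a real weather API call (steps 3 and 4)."""
    return {"city": params["city"], "temp_c": 21, "conditions": "sunny"}

def handle_function_call(assistant_message, messages):
    """Forward the arguments to the API and append the result for the follow-up request."""
    call = assistant_message["function_call"]
    args = json.loads(call["arguments"])  # arguments arrive as a JSON string
    result = fake_weather_api(args)
    messages.append(assistant_message)
    # Step 5: this message is what gets sent back to ChatGPT.
    messages.append({"role": "function", "name": call["name"],
                     "content": json.dumps(result)})
    return messages

# Hand-written example of what ChatGPT might return after step 2.
assistant_message = {
    "role": "assistant",
    "content": None,
    "function_call": {"name": "GetWeather", "arguments": "{\"city\": \"Oslo\"}"},
}
messages = [{"role": "user", "content": "What's the weather in Oslo?"}]
messages = handle_function_call(assistant_message, messages)
print(json.dumps(messages[-1], indent=2))
```

Sending the updated `messages` list in a second request lets ChatGPT phrase the weather result in natural language for the user.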

It’s difficult to imagine all of the possibilities you can enable with function calls to ChatGPT. The examples provided here are fairly abstract, so take a look at the examples included in the plugin’s content folder for more specific use cases.

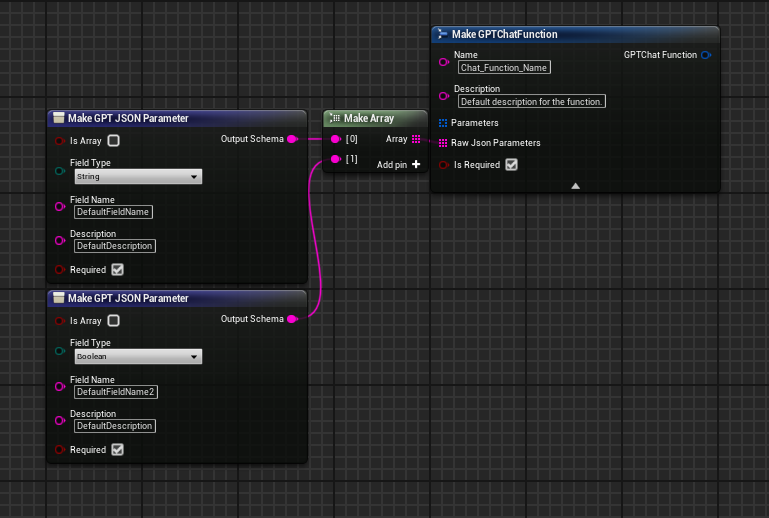

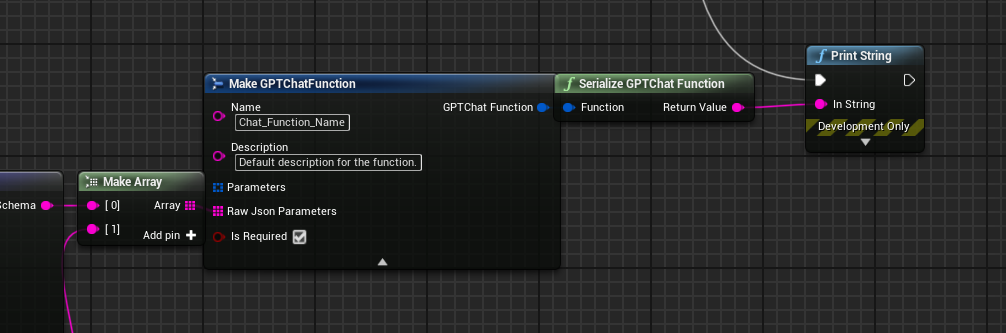

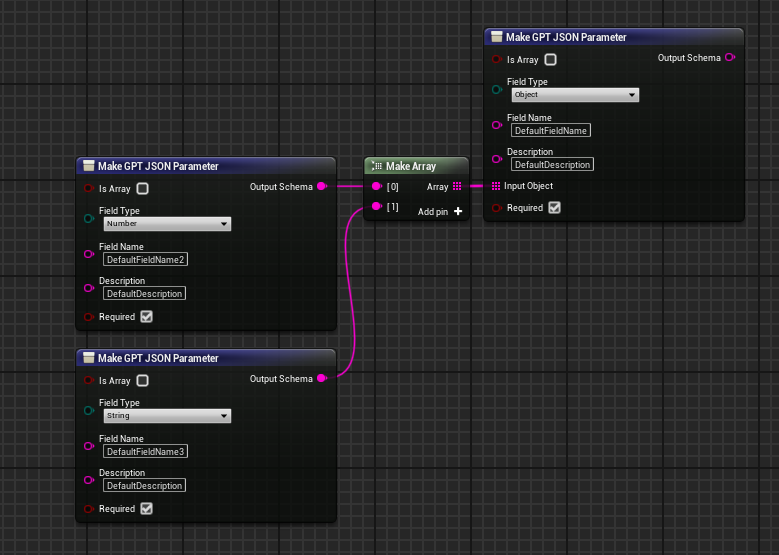

MakeGPTJsonParameter

Make GPT JSON Parameter is a custom node designed to show you only the input data relevant to the parameter type you intend to construct. The function outputs the schema as plain text, so you can double-check the output manually if you need to. Feed the output of this function into the “Raw Json Parameters” pin. This pin accepts any number of plain-text JSON schemas (function parameters), so feel free to construct schemas manually if you prefer.

*Use Serialize GPT Chat Function to double-check for formatting errors of the entire function

*Connect MakeGPTJSONParameter nodes together to nest object parameters:

    "DefaultFieldName": {
      "description": "DefaultDescription",
      "type": "object",
      "properties": {
        "DefaultFieldName2": {
          "description": "DefaultDescription",
          "type": "number"
        },
        "DefaultFieldName3": {
          "description": "DefaultDescription",
          "type": "string"
        }
      },
      "required": ["DefaultFieldName2", "DefaultFieldName3"],
      "RequiredField": true
    }
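To make the nesting idea concrete outside of Blueprints, here is a small sketch that builds the same kind of nested schema in code. `make_parameter` is a hypothetical helper, not the plugin's node; it marks every child as required and leaves out the plugin-specific `RequiredField` flag, both of which are simplifying assumptions.

```python
import json

def make_parameter(name, description, param_type, children=None, enum=None):
    """Return {name: schema}; nest by passing other make_parameter results as children.

    Hypothetical helper for illustration; not the MakeGPTJsonParameter node itself.
    """
    schema = {"description": description, "type": param_type}
    if enum:
        schema["enum"] = list(enum)
    if param_type == "object":
        properties = {}
        for child in children or []:
            properties.update(child)  # each child is a one-entry {name: schema} dict
        schema["properties"] = properties
        schema["required"] = list(properties)  # assumption: every child is required
    return {name: schema}

nested = make_parameter(
    "DefaultFieldName", "DefaultDescription", "object",
    children=[
        make_parameter("DefaultFieldName2", "DefaultDescription", "number"),
        make_parameter("DefaultFieldName3", "DefaultDescription", "string"),
    ],
)
print(json.dumps(nested, indent=2))
```

Because each call returns a plain dictionary, object parameters can be nested to any depth by passing one call's result as a child of another.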

*Mix and match BaseObject derived functions, Make GPT JSON Parameter functions, or even manually constructed json schemas as a string if desired.

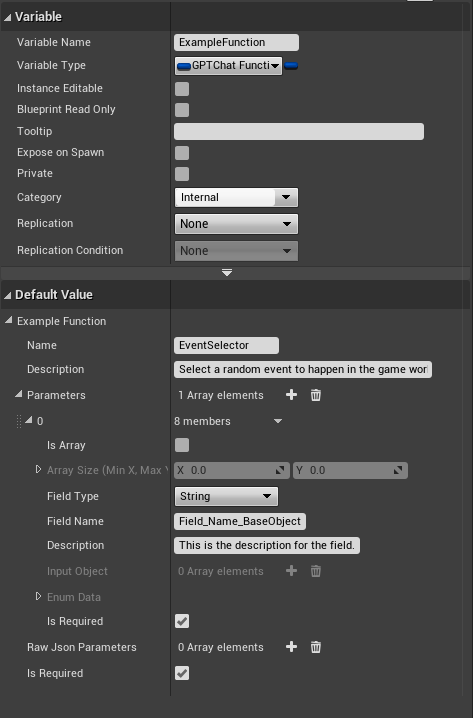

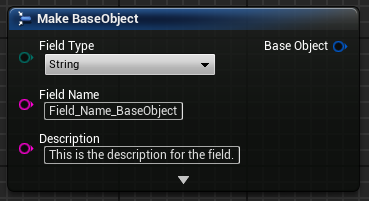

BaseObject (Struct)

This is the base struct that the GPTChatFunction struct uses to add your function parameters to the main function object. Typically you wouldn’t construct this in the event graph. The intended usage is to make a GPTChatFunction variable and fill in the parameters in the Details panel. This is probably the most straightforward way to construct your functions, but it does not allow for object hierarchies deeper than 3 levels. If you need deeper nesting than that, use the MakeGPTJsonParameter function and simply set the members in your GPTChatPromptParams struct.

*This is one way to add your functions to the GPT request at runtime, add more than one if needed

Example Output:

x1 param, x1 function (Before full request construction):

    {
      "name": "YourFunctionName",
      "description": "Description of your function or instructions for GPT",
      "parameters": {
        "type": "object",
        "properties": {
          "ExampleEnumParam": {
            "description": "Description of data or instructions for GPT",
            "type": "string",
            "enum": ["None", "BSOD", "ColorChange", "Whatever_You_Want"]
          }
        },
        "required": ["ExampleEnumParam"]
      }
    }

x3 params, x2 functions (Full request):

    {
      "model": "gpt-3.5-turbo-1106",
      "messages": [
        {
          "role": "system",
          "content": "Pretend you are a retro computer console called \"Chat Boy\" and not an assistant, you speak robotic and snarky and don't know anything past 1995. If the user asks what you can do or anything related to your abilities, run the RandomEvent function. You are not an assistant, and not helpful."
        },
        {
          "role": "user",
          "content": ""
        }
      ],
      "stream": true,
      "functions": [
        {
          "name": "ExampleFunction1",
          "description": "Default description for the function.",
          "parameters": {
            "type": "object",
            "properties": {
              "DefaultFieldName": {
                "description": "DefaultDescription",
                "type": "string"
              },
              "DefaultFieldName2": {
                "description": "DefaultDescription",
                "type": "boolean"
              }
            },
            "required": ["DefaultFieldName", "DefaultFieldName2"]
          }
        },
        {
          "name": "ExampleFunction2",
          "description": "Select a random event to happen in the game world",
          "parameters": {
            "type": "object",
            "properties": {
              "Field_Name_BaseObject": {
                "description": "This is the description for the field."
              }
            },
            "required": ["Field_Name_BaseObject"]
          }
        }
      ],
      "function_call": "auto",
      "max_tokens": 4000
    }

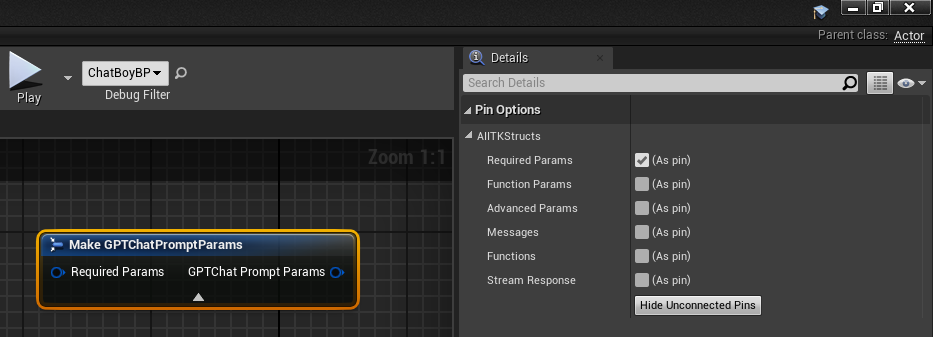

Tip:

With a structure node selected (Make, Break, etc.), use the Details panel to hide unused pins and keep your graph tidy.

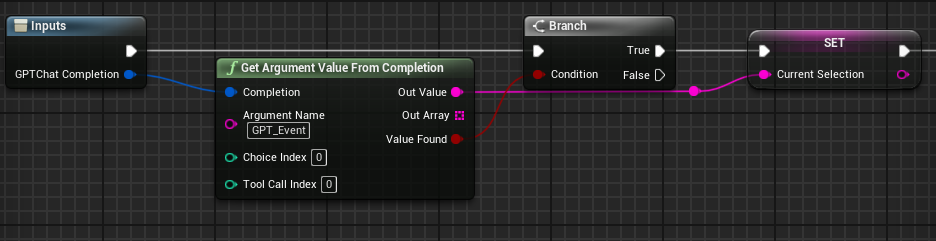

Parsing Function Responses

GetArgumentValueFromCompletion

Parses the arguments from the ChatGPT function call response and retrieves the requested value from the map it generates. The parameter name you sent to GPT is used as the argument name in the JSON structure it returns. In other words, look up each value using the same name you gave the parameter in your function schema.
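The lookup described here can be sketched as below. `get_argument_value` is a hypothetical stand-in rather than the plugin's code, and the sample response is hand-written in the `function_call` shape, where `arguments` is a JSON-encoded string that must be parsed before values can be read by name.

```python
import json

def get_argument_value(completion, argument_name, choice_index=0):
    """Parse the function call arguments and return one value by parameter name.

    Hypothetical stand-in for illustration; not the plugin's implementation.
    """
    message = completion["choices"][choice_index]["message"]
    call = message.get("function_call")
    if call is None:
        return None  # the model answered with plain text instead of a function call
    arguments = json.loads(call["arguments"])  # JSON string -> dict (the "map")
    return arguments.get(argument_name)

# Hand-written sample: the argument name matches the schema's parameter name.
sample_response = {
    "choices": [
        {
            "message": {
                "role": "assistant",
                "content": None,
                "function_call": {
                    "name": "YourFunctionName",
                    "arguments": "{\"ExampleEnumParam\": \"BSOD\"}",
                },
            }
        }
    ]
}

print(get_argument_value(sample_response, "ExampleEnumParam"))
```

Asking for a name that was not part of the schema simply returns `None` in this sketch, which is a convenient signal that the parameter name and the lookup name have drifted apart.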

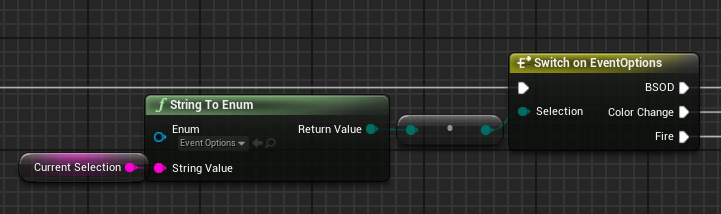

Bonus:

If you are expecting an enum “selection” to be returned, use String To Enum along with a Byte To Enum conversion to utilize switch/select nodes, useful for organizing predefined execution pathways.
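The same idea, expressed outside of Blueprints, is to convert the returned string into an enum value and branch on it. The sketch below is only an analogy to the String To Enum plus switch pattern: the `RandomEvent` enum reuses the values from the example schema above, and the handler strings are placeholders invented for the example.

```python
from enum import Enum

class RandomEvent(Enum):
    NONE = "None"
    BSOD = "BSOD"
    COLOR_CHANGE = "ColorChange"
    WHATEVER_YOU_WANT = "Whatever_You_Want"

def string_to_event(value):
    """Convert the model's string into an enum, falling back to NONE if unknown."""
    try:
        return RandomEvent(value)
    except ValueError:
        return RandomEvent.NONE

# Placeholder execution pathways, one per enum value (the "switch" step).
HANDLERS = {
    RandomEvent.NONE: lambda: "nothing happens",
    RandomEvent.BSOD: lambda: "show blue screen",
    RandomEvent.COLOR_CHANGE: lambda: "change screen color",
    RandomEvent.WHATEVER_YOU_WANT: lambda: "run custom event",
}

def run_event(value):
    """Pick the predefined pathway that matches the returned enum string."""
    return HANDLERS[string_to_event(value)]()

print(run_event("BSOD"))
```

Falling back to a neutral value for unrecognized strings keeps the game in a known state if the model returns something outside the enum list.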